Webscraping by example

Max Maischein

Frankfurt.pm

Overview

- What is Web Scraping?
- Examples of scraping web pages
- Considerations for implementing your own scraper

Who am I?

- Max Maischein
- DZ BANK Frankfurt
- Deutsche Zentralgenossenschaftsbank
- Information management

My Leitmotiv

Automation

- If I can do it manually
- ... then the computer can repeat it
- ... correctly every time

My Environment

DZ BANK AG

Intranet web automation and scraping (WWW::Mechanize::Firefox)

- People ask about web scraping
- People ask about HTML parsing

What Is Web Scraping?

Web Scraping is

- the automated process of
- extracting data
- from resources served over HTTP
- and encoded as HTML
- (or Javascript)

What you need to know

- Perl
- A bit of HTTP
- A bit of HTML

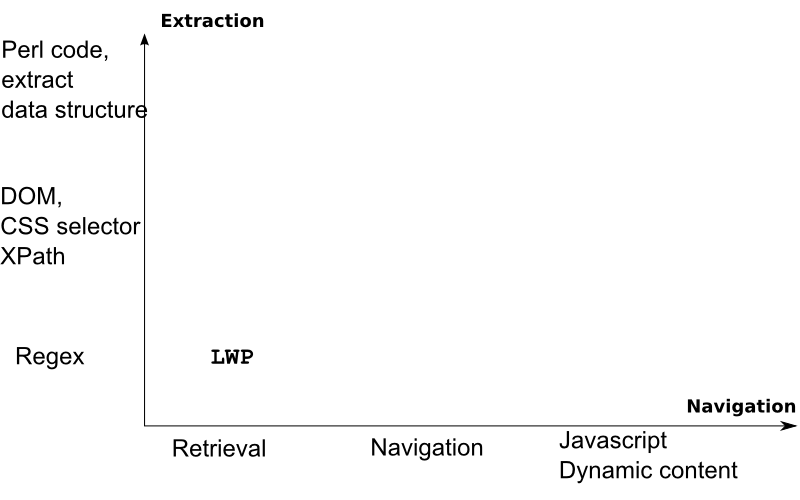

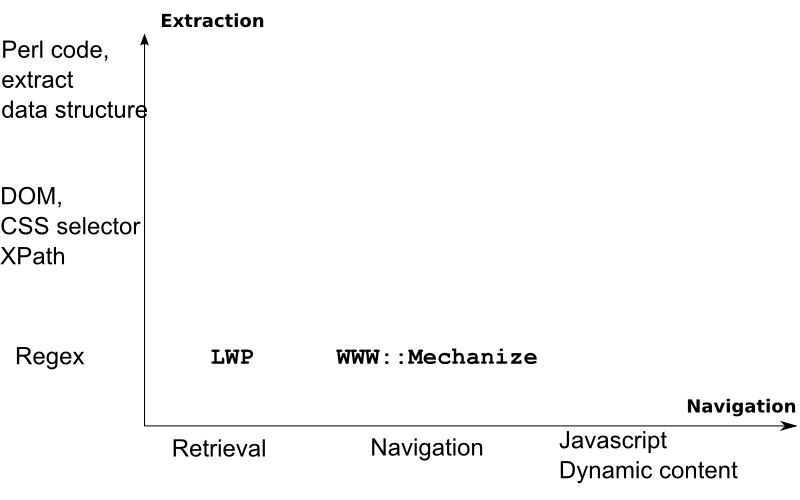

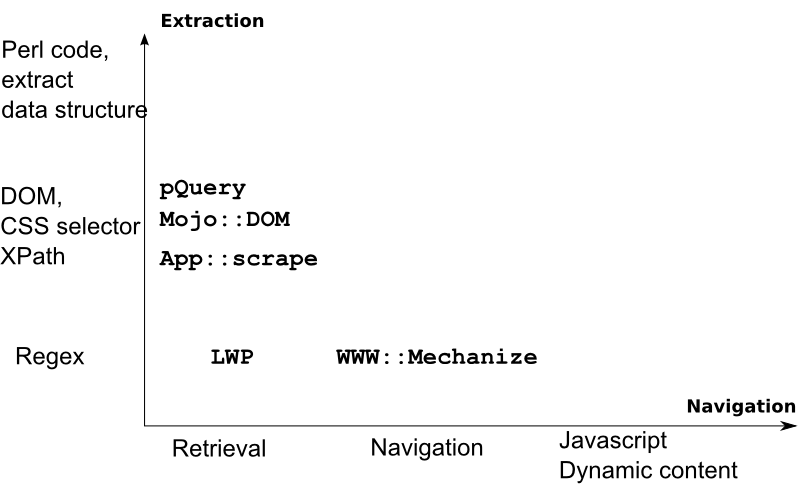

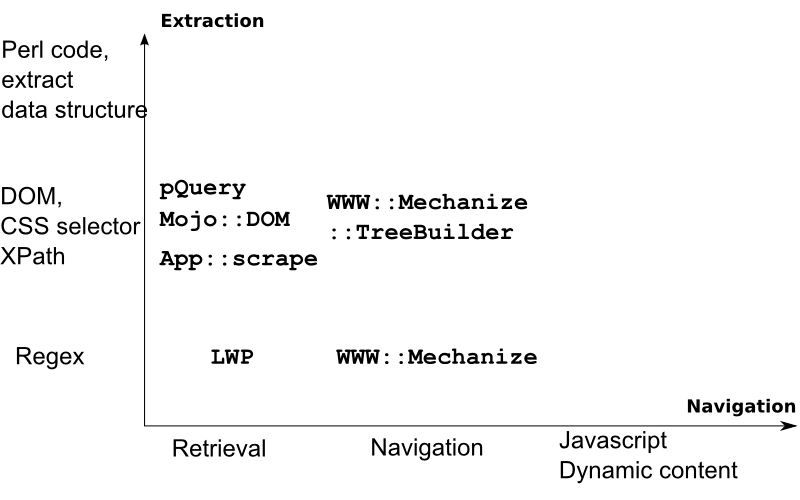

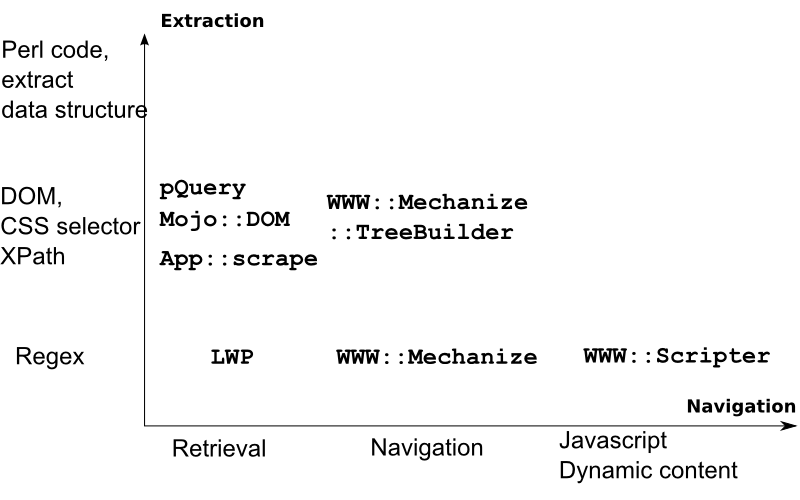

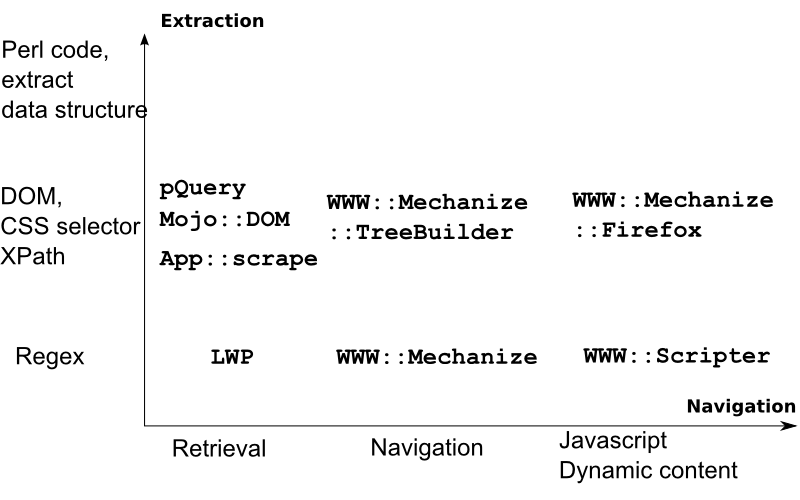

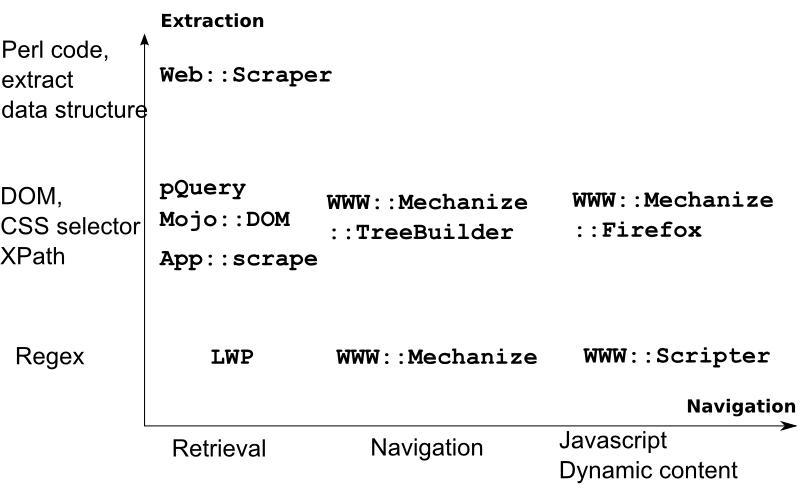

Concepts and Modules

- WWW::Mechanize::Firefox::DSL
- Shared API with others
  - WWW::Scripter, WWW::Mechanize
- Same concepts elsewhere
  - Mojo::DOM

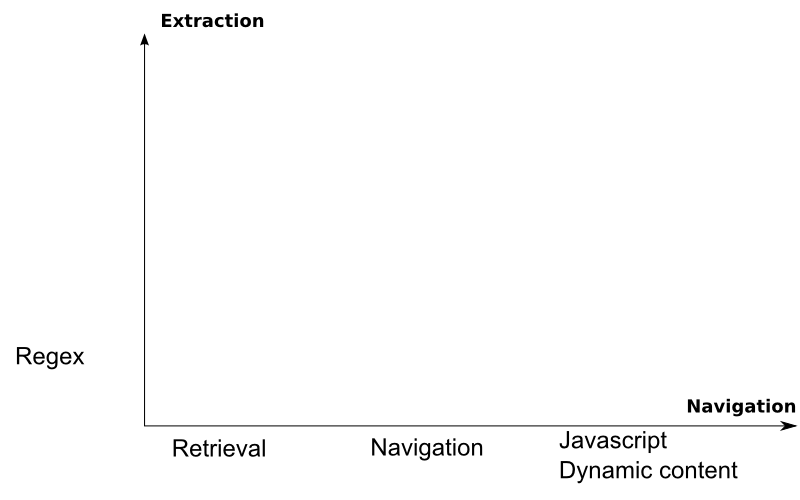

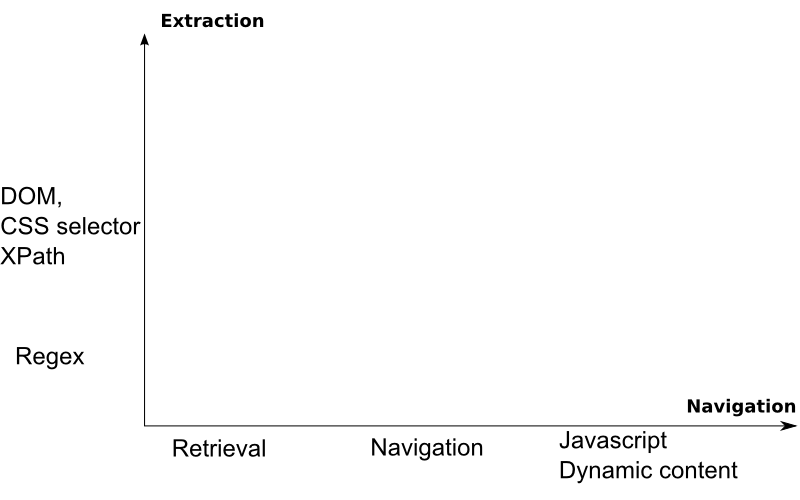

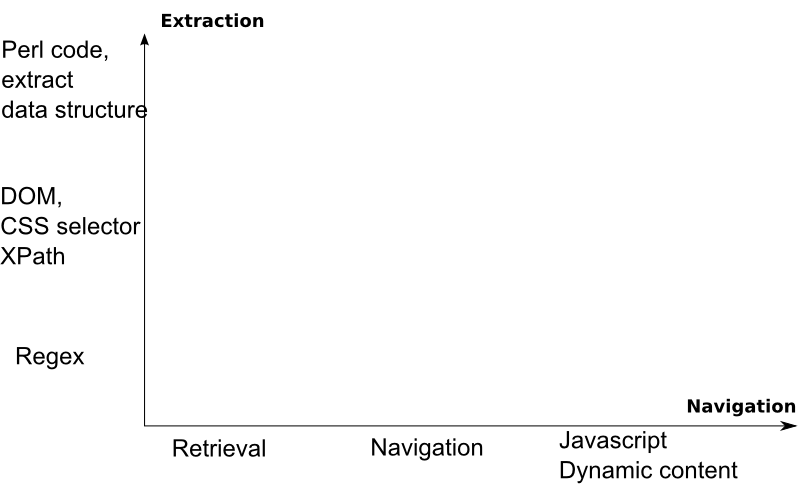

Aspects of Web Scraping

Not everything that is technically possible is also allowed or desired

- Can you avoid scraping?
  - Database access
  - Database dump
- Is scraping allowed?
  - Terms of Service (TOS)
  - TOS applicable?

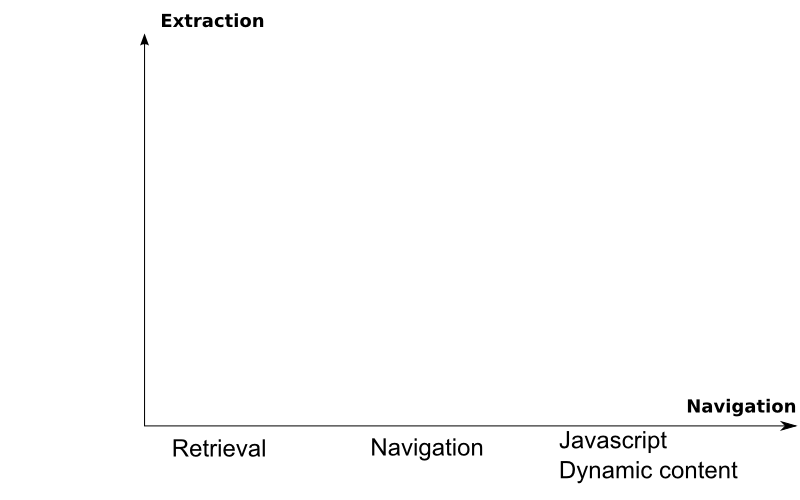

Process

- Identify the steps a human takes
  - Navigation
  - Content extraction
- Automate these steps
- Repeat

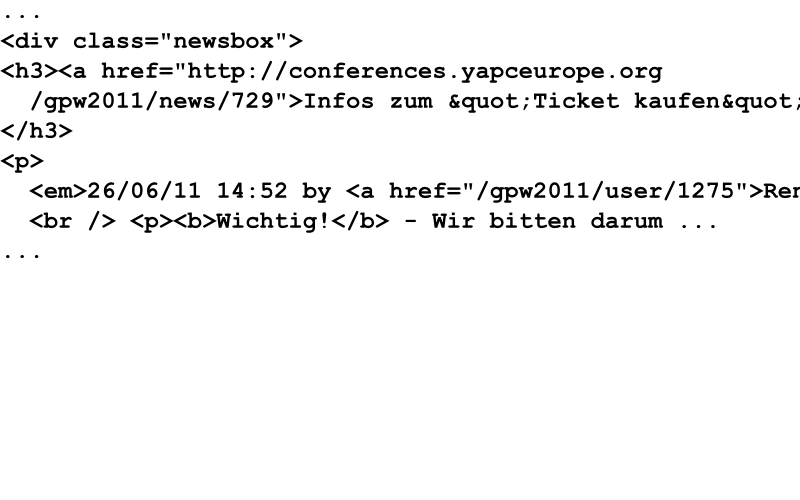

Example: Extracting news posts

- 13th German Perl Workshop (19th to 21st of October 2011)
- Need to stay abreast of news posts
- Only extraction, not navigation

Look at the page

Print the page HTML

    #!perl -w
    use WWW::Mechanize::Firefox;
    my $mech = WWW::Mechanize::Firefox->new();

    # Load the workshop page in Firefox and dump its HTML
    $mech->get('http://conferences.yapceurope.org/gpw2011');
    print $mech->content();

Some simplicity

With WWW::Mechanize::Firefox::DSL

    #!perl -w
    use WWW::Mechanize::Firefox::DSL;

    get('http://conferences.yapceurope.org/gpw2011');
    print content();

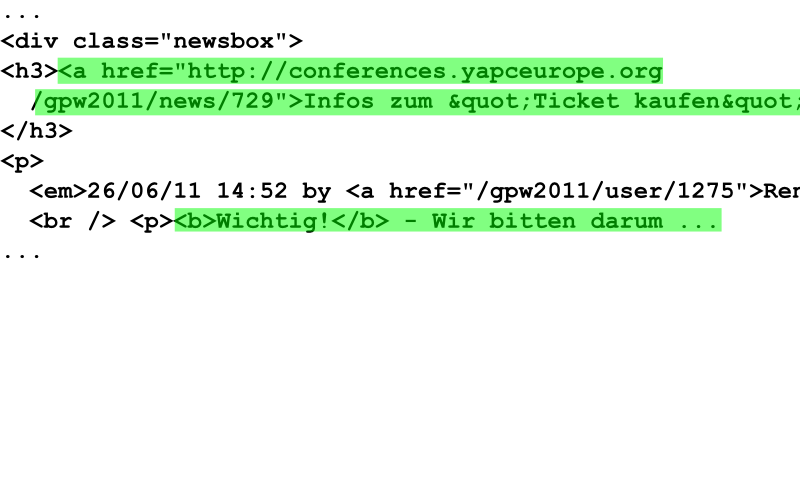

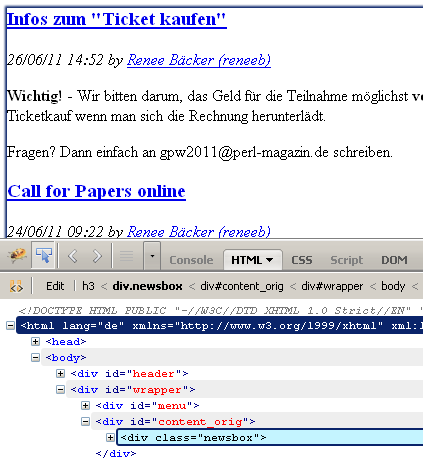

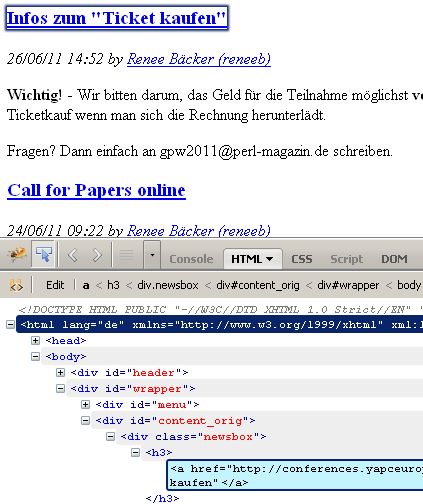

Web Page

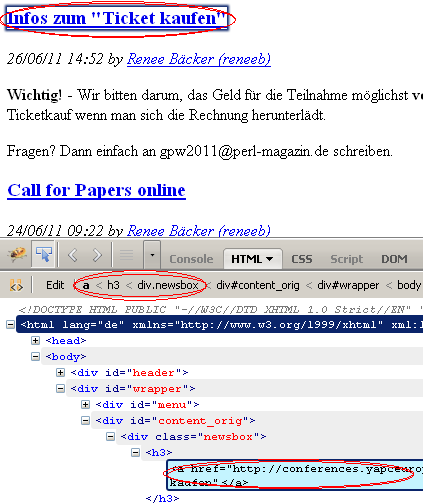

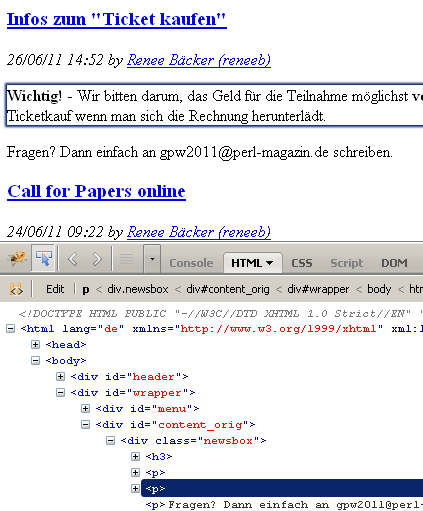

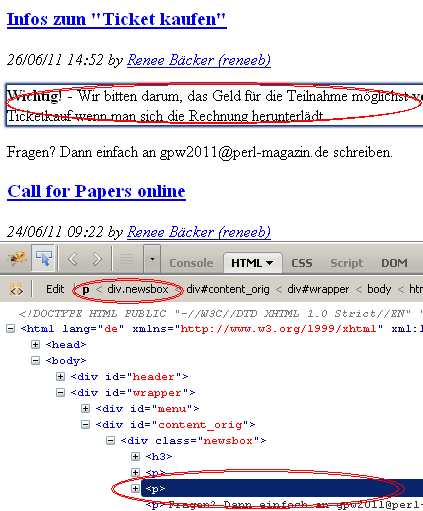

Firebug

- Add-on for Firefox
- Visual tool to inspect elements on the web page

Our goal

Extract news posts

First CSS selector

    .newsbox

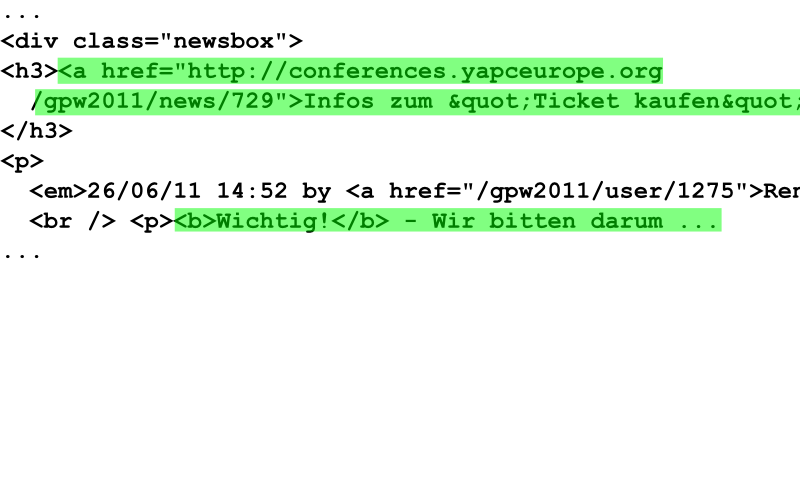

Refined CSS selector

    .newsbox h3 a
    Call for Papers online

    .newsbox h3+p+p
    Endlich ist es so weit ...

Perl script

    #!perl -w
    use strict;
    use WWW::Mechanize::Firefox::DSL;

    # Load a saved copy of the news page
    get_local('html/gpw-2011-news.htm');

    # One list of headline links, one list of the matching text paragraphs
    my @headline = selector('.newsbox h3 a');
    my @text = selector('.newsbox h3+p+p');

    # Print each headline followed by its text
    for my $article (0..$#headline) {
        print $headline[$article]->{innerHTML}, "\n";
        print "---", $text[$article]->{innerHTML}, "\n";
    }

Demo

    demo/01-scrape-gpw-2011.pl

Refining the extraction

- Command line might be more convenient
- Command line XPath extractor like
  - Mojolicious::get
  - App::scrape
  - WWW::Mechanize::Firefox/examples/scrape-ff.pl

Summary

The example showed

- Data extraction using CSS selectors
  - WWW::Mechanize + HTML::TreeBuilder::XPath + HTML::Selector::XPath
  - WWW::Mechanize::Firefox
  - App::scrape
  - Mojo::DOM

More Complex Example situation (Wikipedia)

- Extract images of (DC) Super Heroes from Wikipedia
- Input: Name of Super Hero
- Output: Image URL (or data) of Super Hero Image on Wikipedia and description text

Sample Session

- Enter Super Hero name (Navigation)
- See description on resulting page (Extraction)
- See picture on resulting page (Extraction)

First stab

    use WWW::Mechanize::Firefox::DSL;
    use strict;

    # Navigation
    my ($hero) = @ARGV;
    my $url = 'http://en.wikipedia.org';
    get $url;
    print content; # are we on the right page?

    print title;
    # Wikipedia, the free encyclopedia ...

Check for "where we are"

Important, not now, but later when the website changes

    use Carp qw(croak);

    my ($hero) = @ARGV;
    my $url = 'http://en.wikipedia.org';
    get $url;
    on_page("Wikipedia, the free encyclopedia");

    ...
    on_page("$hero - Wikipedia");

    # Stop with a clear message if the browser is not on the expected page
    sub on_page {
        my ($expected) = @_;
        if (title !~ /\Q$expected\E/) {
            croak sprintf "Wrong page [%s], expected [%s]",
                title, $expected;
        }
    }
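The title check can also be tried without a running browser. The following is a minimal sketch, assuming the page title is passed in as a plain string; `assert_title` is a hypothetical helper name and the sample titles are illustrative, not taken from a live session.

```perl
use strict;
use warnings;
use Carp qw(croak);

# Hypothetical helper: croak unless $title contains $expected literally.
sub assert_title {
    my ($title, $expected) = @_;
    # \Q...\E quotes regex metacharacters, so $expected is matched as plain text
    if ($title !~ /\Q$expected\E/) {
        croak sprintf "Wrong page [%s], expected [%s]", $title, $expected;
    }
    return 1;
}

# A matching title passes silently
assert_title('Superman - Wikipedia, the free encyclopedia', 'Superman - Wikipedia');

# A search-results page is caught instead of being scraped by mistake
my $ok = eval { assert_title('Plastix man - Search results', 'Plastix man - Wikipedia'); 1 };
print "Caught: $@" unless $ok;
```

Wrapping the call in `eval` lets a batch run log the failure and continue with the next name instead of stopping at the first wrong page.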

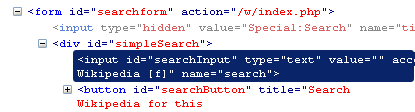

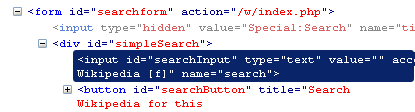

Fill in the field

- Find the field

    search

- Find the field name

    submit_form( with_fields => {
        search => $hero,
    });

Where are we now?

    print title;

    # Superman - Wikipedia, the free ...

    on_page($hero);

Counterexample

    Plastix man

    ...
    Wrong page [Plastix man - Search results -...]

What do we have now?

- Enter Super Hero name (Navigation)
- See description on resulting page (Extraction)
- See picture on resulting page (Extraction)

Find the description

- Find the text selector (Firebug, App::scrape, examples/scrape-ff.pl)

    .infobox +p

- Extract it

    print $_->{innerHTML}
        for selector('.infobox +p');

Find the image

- Find the image selector (Firebug, ...)

    .infobox a.image img

- Extract it

    print $_->{src}
        for selector('.infobox a.image img');

    # http://upload.wikimedia.org/wikipedia/en/thumb/7/72/Superman.jpg/250px-Superman.jpg

Summary

The example showed

- Site navigation
- Data extraction using CSS selectors
- Navigation validation checking

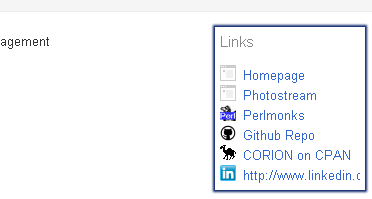

Example: Extracting Google+ profiles

- Google+ is Google's (second attempt at a) social network

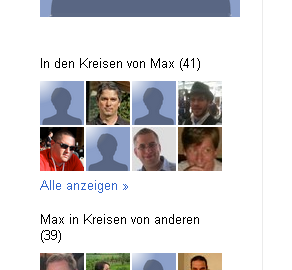

Steps

- Search with Google for the name
- Extract the information from the profile page
- Extract "people in circles"

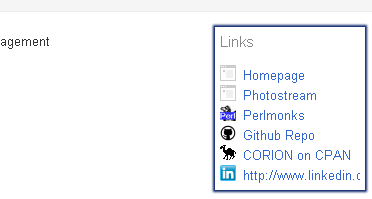

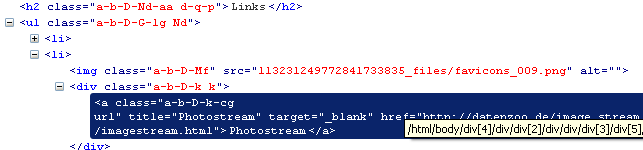

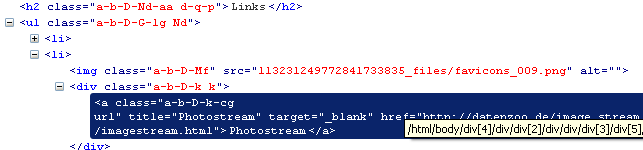

Web Page

    <h2 class="a-b-D-Nd-aa d-q-p">Links</h2>
    <ul class="a-b-D-G-lg Nd">
    <li><img alt="" class="a-b-D-Mf" src="113231249772841733835_files/favicons_007.png">
    <div class="a-b-D-k k"><a class="a-b-D-k-cg url" href="http://corion.net/"
    ...

Find the related links

    div.k a.url

    my @links = selector('div.k a.url');

    for my $link (@links) {
        print $link->{innerHTML}, "\n";
        print $link->{href}, "\n";
        print "\n";
    }

Live demo

    04-scrape-gplus-profile.pl

Find the "circled" contacts

Google relies heavily on JSON / dynamic Javascript

    var OZ_initData =
    ...
    ,""]
    ,1,,1]
    ,[[41,[["113771019406524834799","/113771019406524834799",...,"Paul Boldra"]
    ,["101868469434747579306","/101868469434747579306",...,"Curtis Poe"]
    ,["114091227580471410039","/114091227580471410039",...,"James theorbtwo Mastros"]
    ,["116195765101222598270","/116195765101222598270",...,"Michael Kröll"]
    ...

We want

    OZ_initData["5"][3][0]

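Extraction from "native" JS

    # Evaluate the expression inside the page; the result arrives as Perl data
    my ($info,$type)
        = eval_in_page('OZ_initData["5"][3][0]');

    # First element: number of accounts; second element: list of accounts
    my $items = $info->[0];
    print "$items accounts in Circles\n";
    for my $i (@{ $info->[1] }) {
        print $i->[0], "\t", $i->[3],"\n";
    }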
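When no browser is available, a different technique is sometimes possible: cut the literal out of the page source with a regular expression and decode it with JSON::PP. This is a sketch under stated assumptions, not the approach used in the talk. The page snippet, the variable name `initData`, and the helper `extract_js_var` are all hypothetical, and the literal must be strict JSON. The `OZ_initData` excerpt shown earlier contains empty array slots (`,1,,1]`), which are valid Javascript but not valid JSON, so it would not decode this way.

```perl
use strict;
use warnings;
use JSON::PP qw(decode_json);

# Hypothetical page source with a strict-JSON literal assigned to a variable
my $html = <<'HTML';
<script>
var initData = {"circles":[2,[["1001","/1001","Alice"],["1002","/1002","Bob"]]]};
</script>
HTML

# Hypothetical helper: return the decoded literal assigned in
# "var NAME = ...;" on a single line, or nothing if it is not found.
sub extract_js_var {
    my ($source, $name) = @_;
    my ($literal) = $source =~ /var\s+\Q$name\E\s*=\s*(.*?);\s*$/m
        or return;
    return decode_json($literal);
}

my $data = extract_js_var($html, 'initData');
my ($count, $people) = @{ $data->{circles} };
print "$count accounts in Circles\n";
print $_->[0], "\t", $_->[2], "\n" for @$people;
```

Evaluating the expression in a real browser, as in the listing above, remains the more robust choice when the data is not strict JSON.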

Live demo

    demo/05-scrape-gplus-circle.pl

Summary

This example showed

- Site/application navigation
- Data extraction from JSON / Javascript structures
  - WWW::Mechanize::Firefox
  - Maybe also WWW::Scripter

Building your own scraper

- You know you want to
- Navigate pages
- Formulate extraction queries
- Queries / Navigation as data or as (Perl) code?
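One way to picture the "as data" side of that question: keep the selectors in a hash and let a small runner apply them. This is only a sketch. `run_queries` and the stand-in page are hypothetical, and the `$select` callback is assumed to return a list of element-like hashes, as `selector()` does in the earlier listings.

```perl
use strict;
use warnings;

# Queries as data: field name => CSS selector (selectors from the news example)
my %news_queries = (
    headline => '.newsbox h3 a',
    text     => '.newsbox h3+p+p',
);

# Hypothetical runner: $select is any callback that returns a list of
# hashes with an innerHTML key for a given selector, for example
# sub { selector(@_) } when WWW::Mechanize::Firefox::DSL is loaded.
sub run_queries {
    my ($select, $queries) = @_;
    my %result;
    for my $field (sort keys %$queries) {
        $result{$field} = [ map { $_->{innerHTML} } $select->($queries->{$field}) ];
    }
    return \%result;
}

# Stand-in for a live page so the sketch runs without a browser
my %fake_page = (
    '.newsbox h3 a'   => [ { innerHTML => 'Call for Papers online' } ],
    '.newsbox h3+p+p' => [ { innerHTML => 'Endlich ist es so weit ...' } ],
);
my $news = run_queries(sub { @{ $fake_page{ $_[0] } || [] } }, \%news_queries);
print "$news->{headline}[0]\n--- $news->{text}[0]\n";
```

Queries kept as data can be stored in a configuration file and changed without touching code; queries written as Perl code are more flexible when a page needs conditional logic.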

Writing your own scraper

Look at and use other scrapers to find out what you need and what you don't like:

- Configuration
- Tradeoff - creation speed vs. environment
- Navigation
- Extraction
- Speed
- External support (Flash, Java, Javascript, ...)

Improving scraping stability and performance

- Your bottleneck likely is network latency and network IO
- Avoid navigation by going directly to the page with the data

    http://en.wikipedia.org/wiki/Superman
    http://en.wikipedia.org/wiki/$super_hero

- Does not work nicely for Google+

    https://plus.google.com/v/xXxXxXx

- Avoid overloading the remote server
- Reduce latency by running multiple scrapers at the same time (AnyEvent::HTTP)
- Avoid overloading the remote server
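Two of these points, going directly to the data page and not overloading the server, can be sketched in a few lines. The helper names `hero_url` and `fetch_politely` are hypothetical, and the URL rule (spaces become underscores, other unsafe characters are percent-encoded) is an assumption about article URLs that ignores redirects and disambiguation pages. The fetch step is a callback so the sketch runs without network access.

```perl
use strict;
use warnings;

# Build the article URL directly instead of navigating the search form.
sub hero_url {
    my ($hero) = @_;
    (my $page = $hero) =~ tr/ /_/;   # assumption: spaces become underscores
    utf8::encode($page);             # percent-encode the UTF-8 bytes
    $page =~ s/([^A-Za-z0-9_.~()-])/sprintf '%%%02X', ord $1/ge;
    return "http://en.wikipedia.org/wiki/$page";
}

# Call $fetch for each URL, pausing $delay seconds between requests
# so the remote server is not overloaded.
sub fetch_politely {
    my ($fetch, $delay, @urls) = @_;
    my @results;
    for my $i (0 .. $#urls) {
        sleep $delay if $i > 0 && $delay > 0;
        push @results, $fetch->($urls[$i]);
    }
    return @results;
}

# Usage with a stand-in fetcher; replace the callback with a real request
my @urls = map { hero_url($_) } 'Superman', 'Plastic Man';
print "$_\n" for fetch_politely(sub { "would fetch $_[0]" }, 1, @urls);
```

A fixed delay is the simplest throttle. When several scrapers run at the same time, the same idea applies per remote host rather than per script.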

Scraping Sites that don't want to be scraped

On the internet, nobody knows that you're a ~~dog~~ Perl script

- Automate the browser

or

- Send the same data as the browser

Automate the browser

- WWW::Mechanize::Firefox
- Visual
- Requires a terminal or VNC display
- Portable Firefox to isolate

Wireshark / Live HTTP Headers

- Replicate what the browser sends

    GET /-8RekFwZY8ug/AAAAAAAAAAI/AAAAAAAAAAA/7gmV6WFSVG8/photo.jpg?sz=80 HTTP/1.1
    Host: lh5.googleusercontent.com
    User-Agent: Mozilla/5.0 (Windows; U; ...

- Differences

    ->user_agent()
    ->cookies()

    Accept-Encoding
    ...
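Replicating the browser's request headers might look like the sketch below, which uses the core module HTTP::Tiny rather than any module named on the slides. The header values are illustrative placeholders, not captured traffic, and `browser_like_get` is a hypothetical helper. The actual request is commented out so the sketch runs without network access.

```perl
use strict;
use warnings;
use HTTP::Tiny;

# Placeholder values: copy the real ones from what Wireshark or
# Live HTTP Headers shows for your own browser.
my $http = HTTP::Tiny->new(
    agent           => 'Mozilla/5.0 (X11; Linux x86_64; rv:10.0) Gecko/20100101 Firefox/10.0',
    default_headers => {
        'Accept'          => 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language' => 'en-US,en;q=0.5',
    },
);

# Hypothetical helper: fetch a URL and return the body, or die with the status
sub browser_like_get {
    my ($ua, $url) = @_;
    my $res = $ua->get($url);
    die "GET $url failed: $res->{status} $res->{reason}\n" unless $res->{success};
    return $res->{content};
}

print "Sending User-Agent: ", $http->agent, "\n";
# print browser_like_get($http, 'http://example.com/');   # needs network access
```

`Accept-Encoding` is left out on purpose: HTTP::Tiny does not decompress response bodies, so advertising gzip would return compressed content that the script has to decode itself.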

Summary

- Many frameworks with different tradeoffs
- Same tradeoffs for your framework
- The network hides your program
- Automate the browser
- Mimic the browser

Thank you

All samples will go online at https://github.com/corion/www-mechanize-firefox

Questions?

Max Maischein (corion@cpan.org)

"Cognitive Hazard" by Anders Sandberg

Super hero images from Wikipedia

Bonus Section

Formulating extraction queries

HTML::Selector::XPath - convert from CSS selectors to XPath

pQuery - jQuery-like extraction

    $dom->q('p')->q('div')->...

Mojo::DOM - AUTOLOAD jQuery-like extraction

    $dom->p->div->(...)

Web::Scraper - specific DSL

    scrape { title => ...,
             'url[]' => scrape { ... }
    }

Relevant Modules

Writing your own scraper

Reuse the work of others

Tatsuhiko Miyagawa

- HTML::Selector::XPath - use CSS3 selectors
- HTML::AutoPagerize - walk through paged results